👋 Hi!

I am a third-year Master’s Student at Shanghai University of Finance and Economics (SUFE), under the supervision of Prof. Yun Chen.

My research interests include Large Language Models, Model Merging, Model Compression, and LLM Safety. Feel free to contact me to discuss or collaborate!

✨ Currently seeking Ph.D. opportunities for Fall 2026.

🔥 News

- 2025.05: 🎉🎉 One paper is accepted by EMNLP 2025 Findings.

- 2025.05: 🎉🎉 One paper is accepted by ACL 2025 Main Conference.

- 2025.01: 🎉🎉 One paper is accepted by NAACL 2025 Main Conference.

- 2024.09: 🎉🎉 One paper is accepted by EMNLP 2024 Main Conference.

📝 Publications

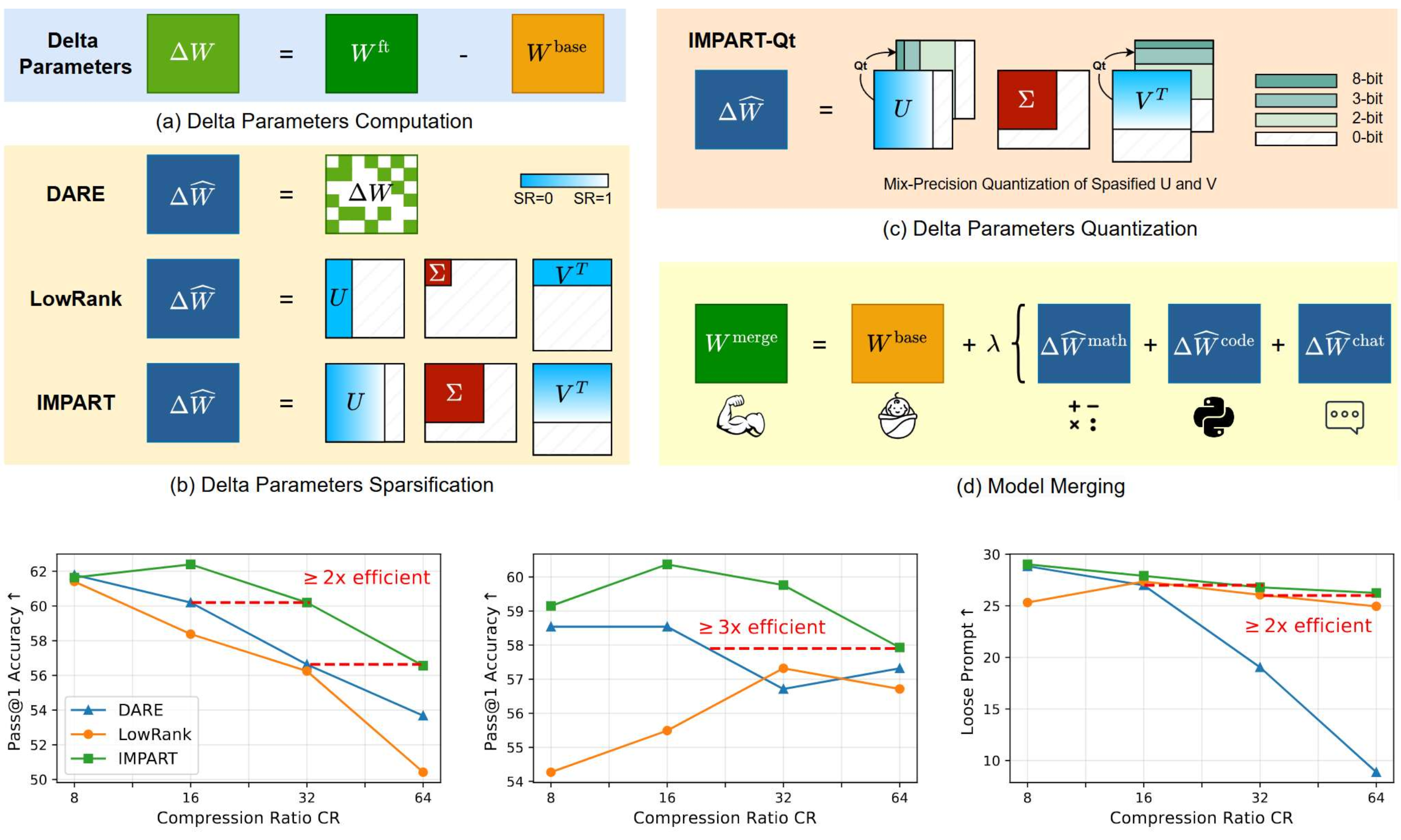

ImPart: Importance-Aware Delta-Sparsification for Improved Model Compression and Merging in LLMs

Yan Yang, Yixia Li, Hongru Wang, Xuetao Wei, Jianqiao Yu, Yun Chen, Guanhua Chen

📑 Paper | ⚙️ Code | 🌟 ACL 2025 Main

- TL;DR: Motivated by the observation that singular vectors with larger singular values encode more important task-specific information, ImPart assigns variable sparsity ratios to singular vectors based on their corresponding singular values. ImPart achieves 2× higher compression efficiency than baselines and further improves model quantization and model merging.

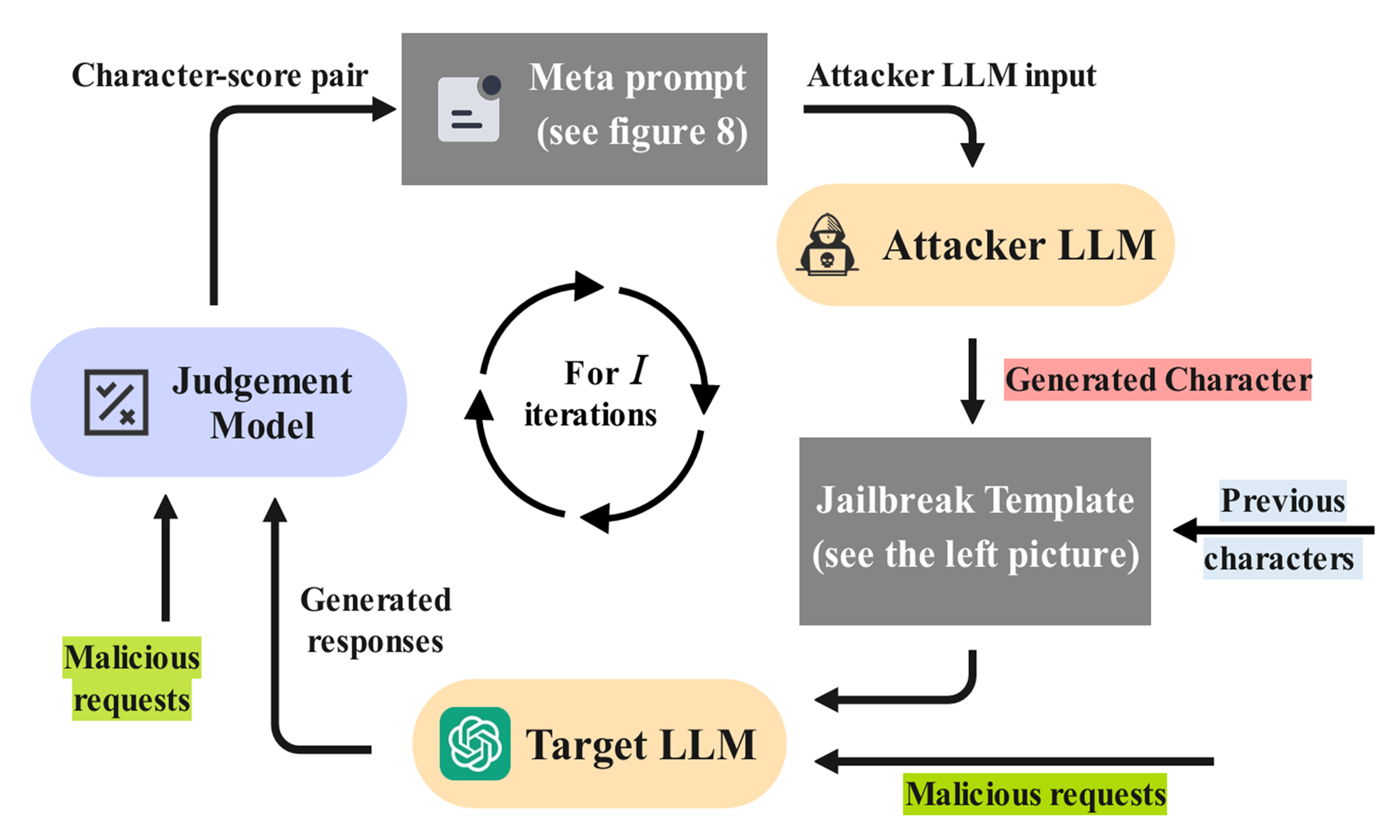

SeqAR: Jailbreak LLMs with Sequential Auto-Generated Characters

Yan Yang, Zeguan Xiao, Xin Lu, Hongru Wang, Xuetao Wei, Hailiang Huang, Guanhua Chen, Yun Chen

📑 Paper | ⚙️ Code | 🌟 NAACL 2025 Main

- TL;DR: Building on established character simulation methods for jailbreaks, SeqAR optimizes multiple characters and prompts LLMs to respond as each of them in sequence, thereby further distracting LLMs and expanding the applicable jailbreak area. SeqAR achieves state-of-the-art jailbreak performance and exhibits strong transferability.

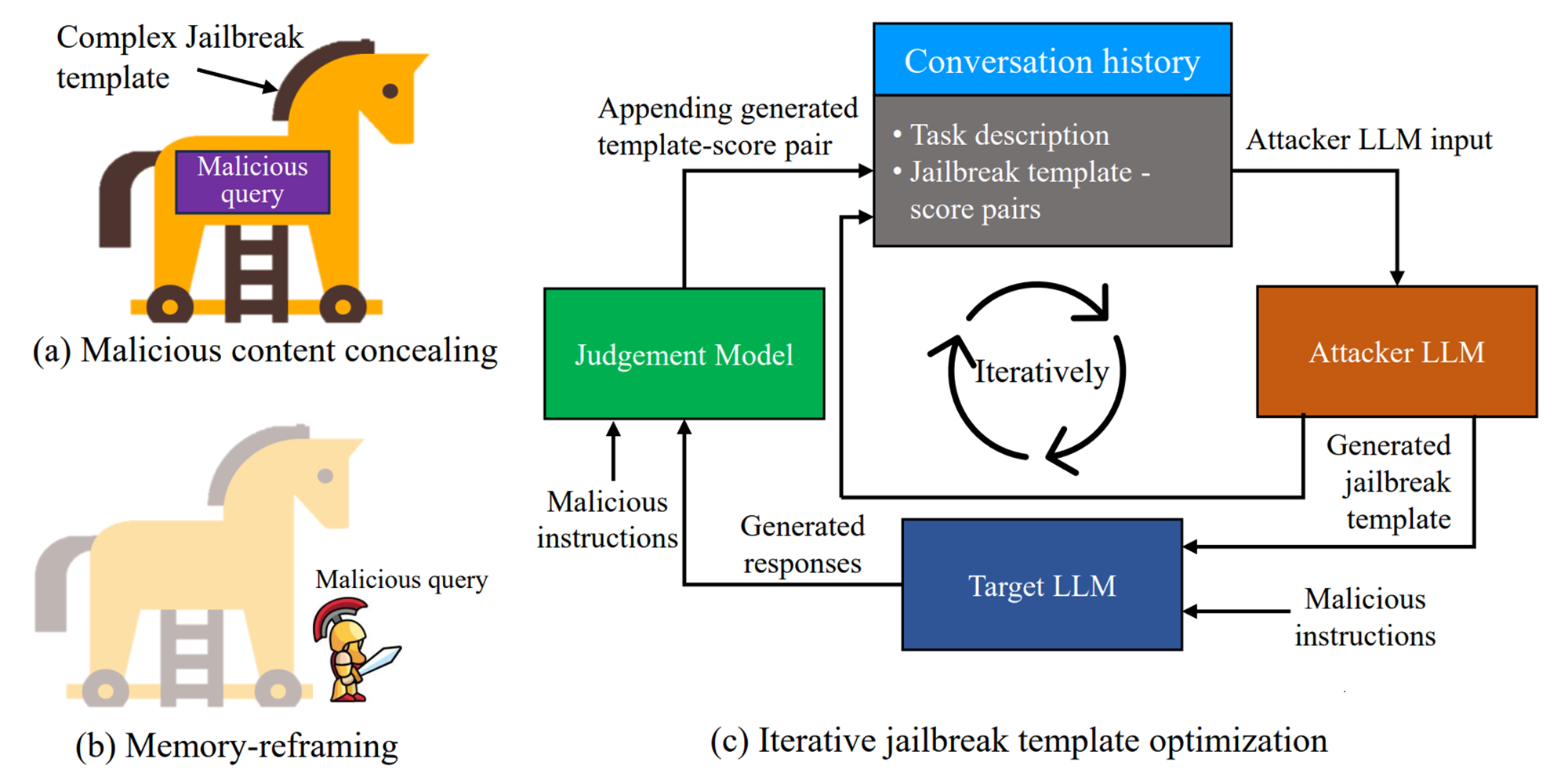

Distract Large Language Models for Automatic Jailbreak Attack

Zeguan Xiao, Yan Yang, Guanhua Chen, Yun Chen

📑 Paper | ⚙️ Code | 🌟 EMNLP 2024 Main

- TL;DR: Leveraging the observation that irrelevant context can distract large language models and diminish their performance, we propose DAP, which employs specially designed jailbreak templates embedded with refined irrelevant context to conceal malicious content. DAP demonstrates robust jailbreak performance.

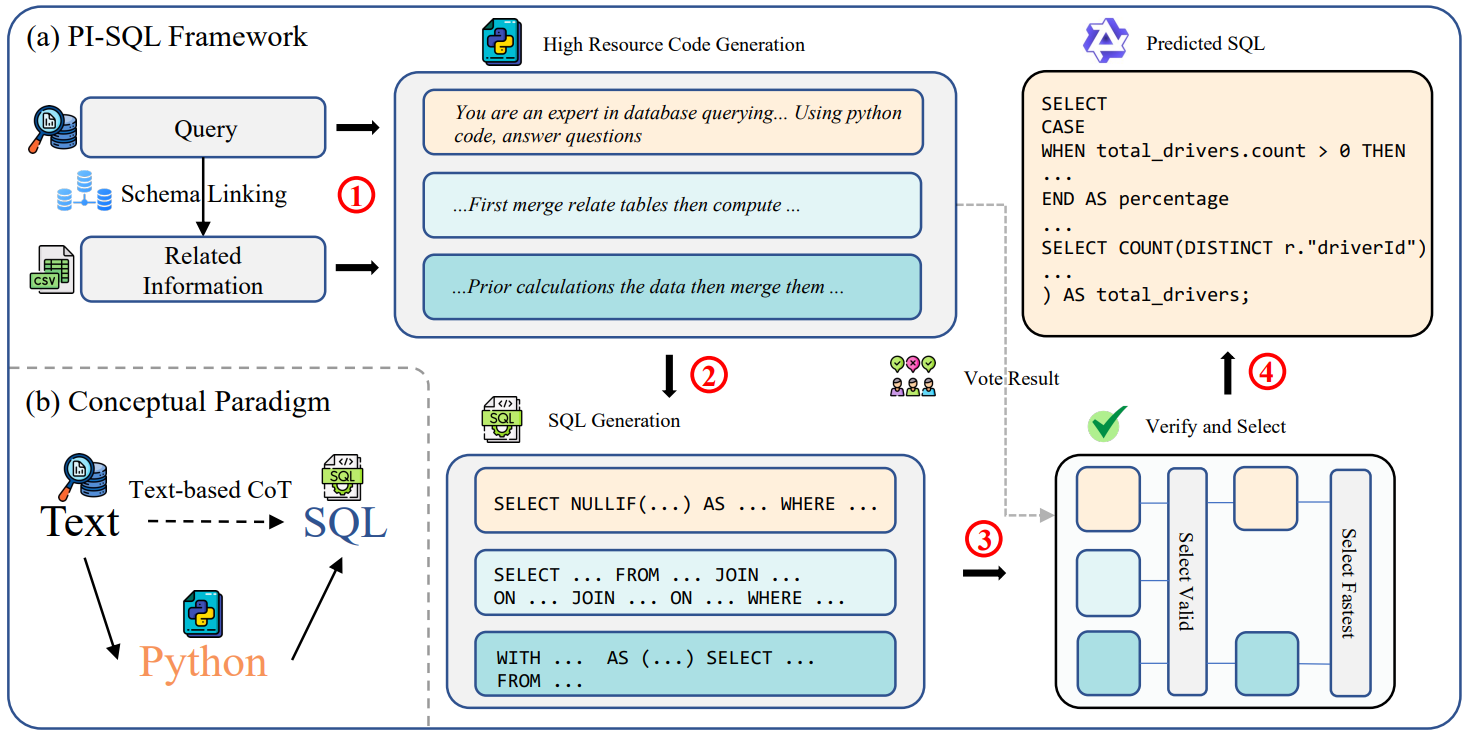

Pi-SQL: Enhancing Text-to-SQL with Fine-Grained Guidance from Pivot Programming Languages

Yongdong Chi, Hanqing Wang, Yun Chen, Yan Yang, Zonghan Yang, Xiao Yan, Guanhua Chen

🌟 EMNLP 2025 Findings

- TL;DR: Pi-SQL uses programs written in Python, a high-resource language, as a pivot to bridge the natural language query and the SQL program. Pi-SQL consistently outperforms existing text-to-SQL approaches, particularly on challenging queries.

🎖 Honors and Awards

- 2023.07 Outstanding Graduate of Shanghai University of Finance and Economics.

- 2023.01 Tailong Commercial Bank Scholarship (top 15%).

- 2020.09 - 2023.07 Renming Scholarship, 3rd Prize (top 15%).

📖 Education

- 2023.09 - 2026.06 (now), Master’s Student, School of Computer & Artificial Intelligence, Shanghai University of Finance and Economics, Shanghai.

- 2019.09 - 2023.06, Undergraduate, School of Information Management & Engineering, Shanghai University of Finance and Economics, Shanghai.

💻 Internships

- 2019.05 - 2020.02, Toursun Synbio, China.

📌 Services

- 2025 ACL ARR Reviewer

🔆 Others

- 2022.09 - 2024.09, Core Technical Team Member of the N.O.P.E. Robotics Club at Shanghai University of Finance and Economics.

- 2020.09 - 2021.06, Leader of the Academic Department of the College Student Union.